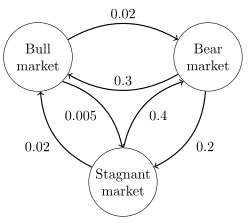

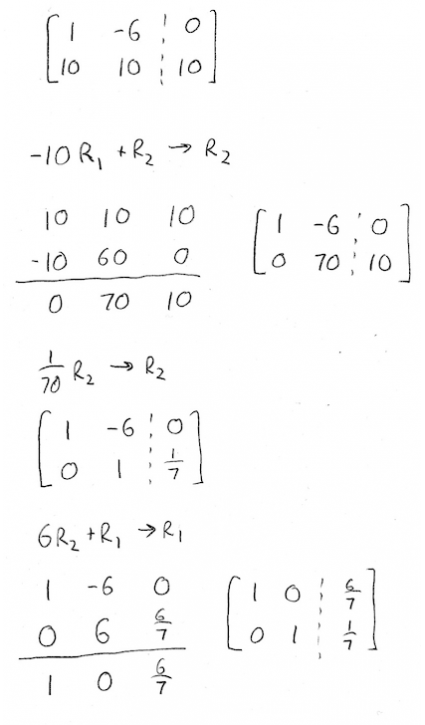

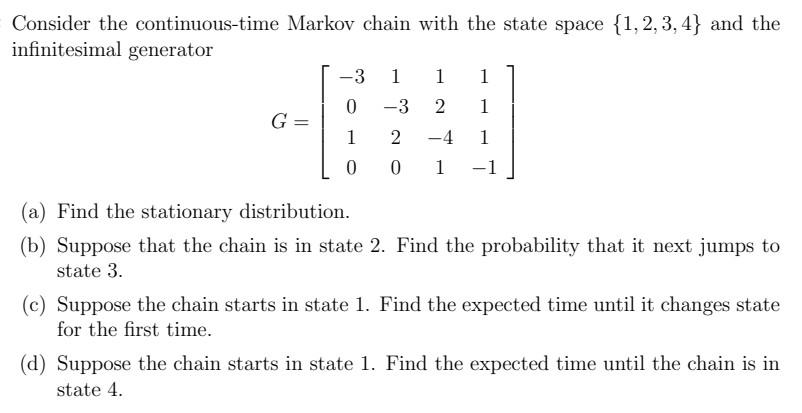

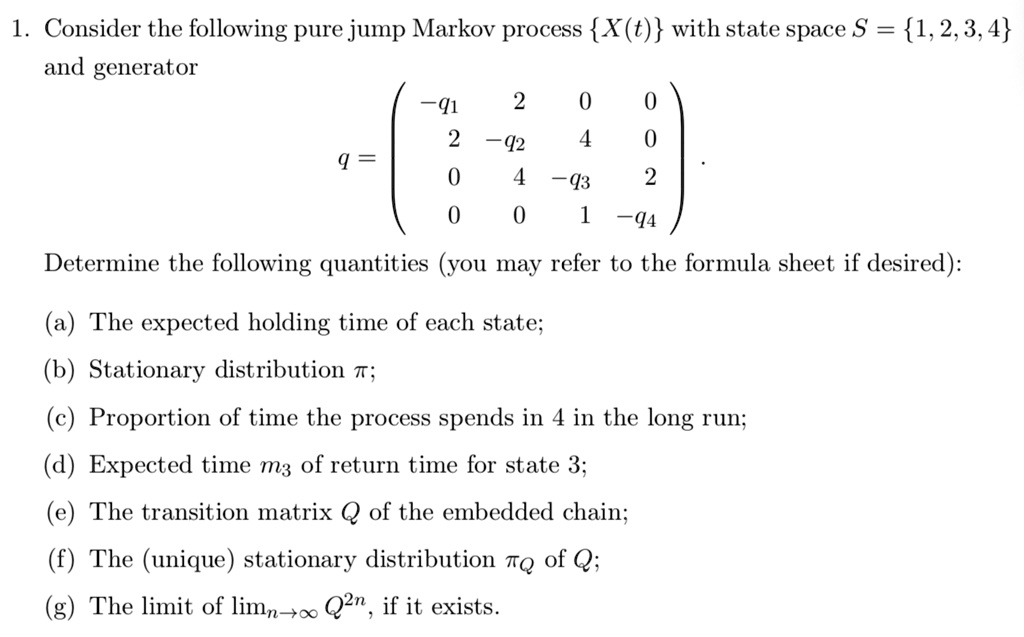

SOLVED: 1 Consider the following pure jump Markov process X(t) with state space S = {1, 2, 3, 4} and generator q1 2 -12 -43 -q4 Determine the following quantities (you may refer to the formula

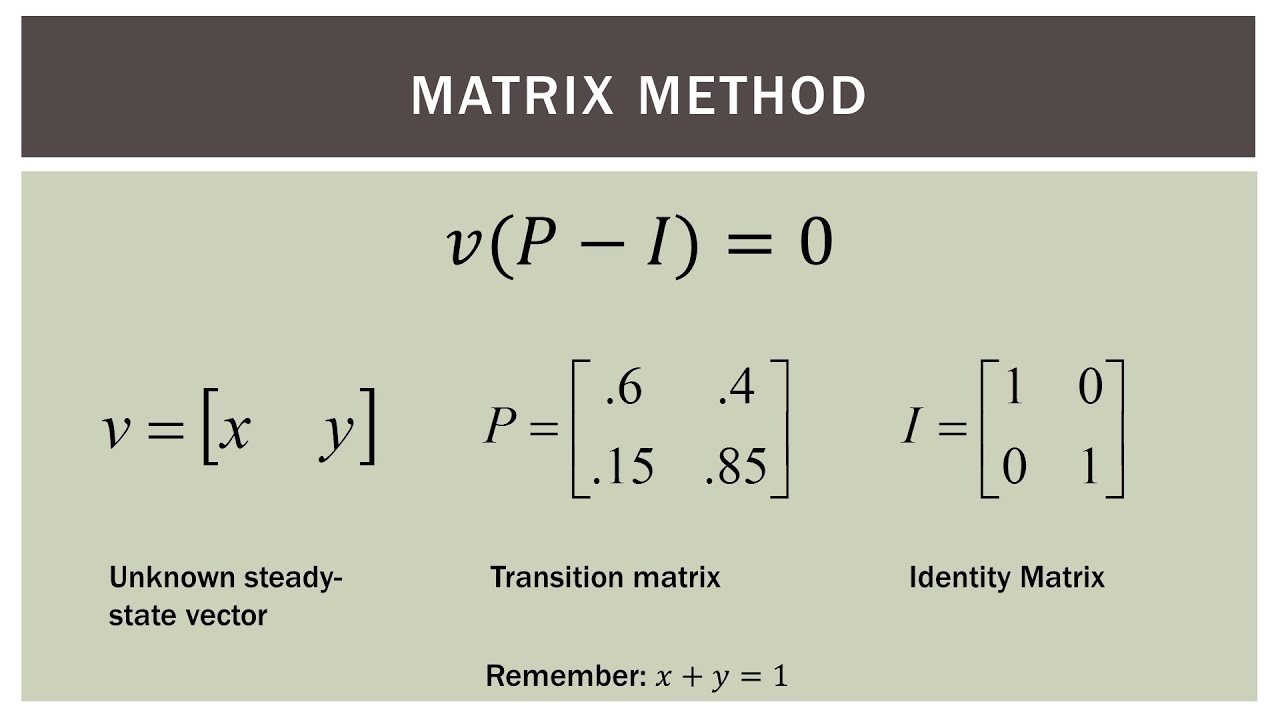

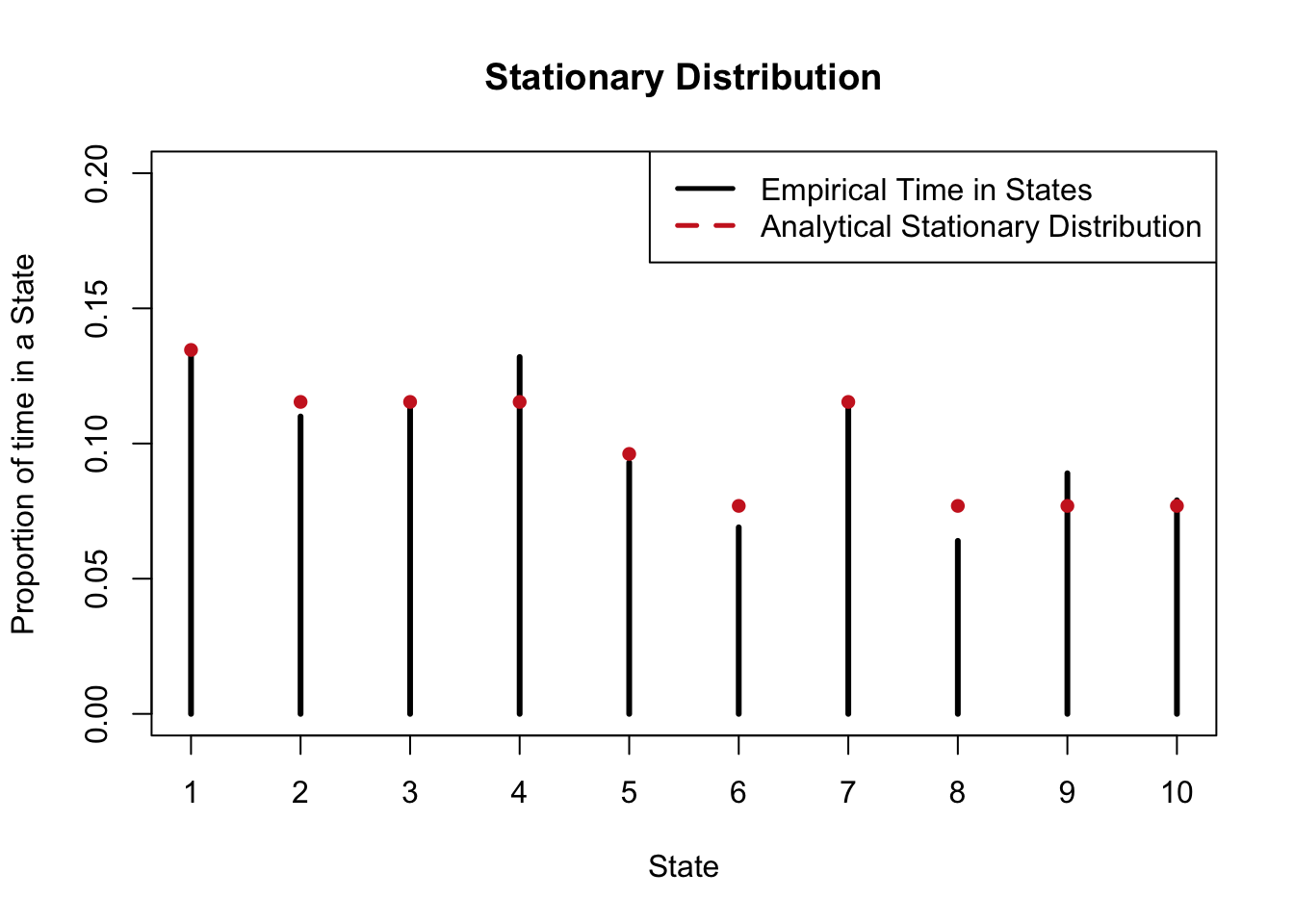

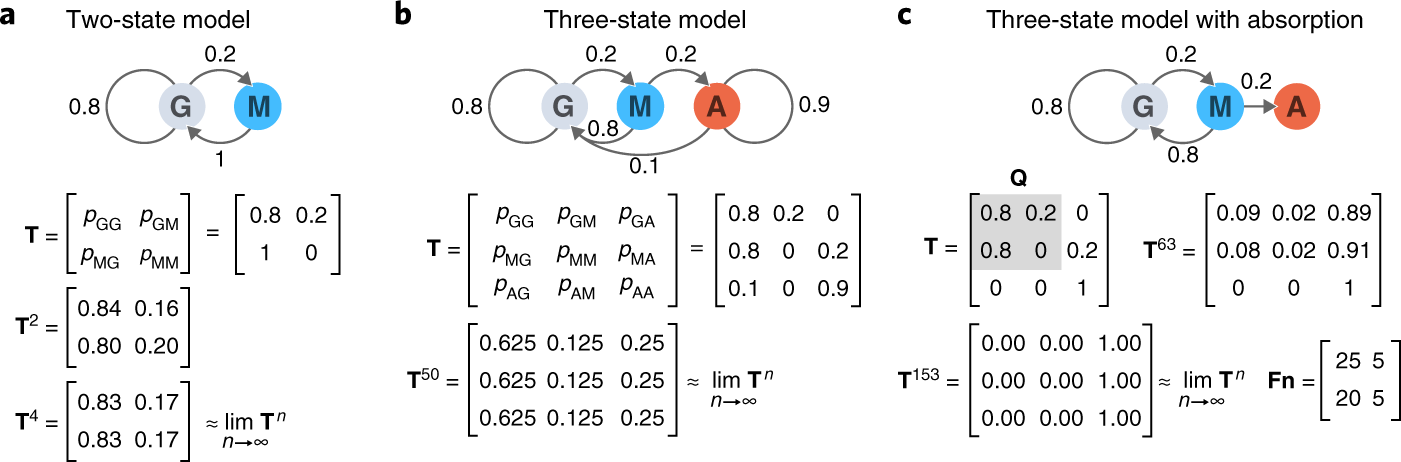

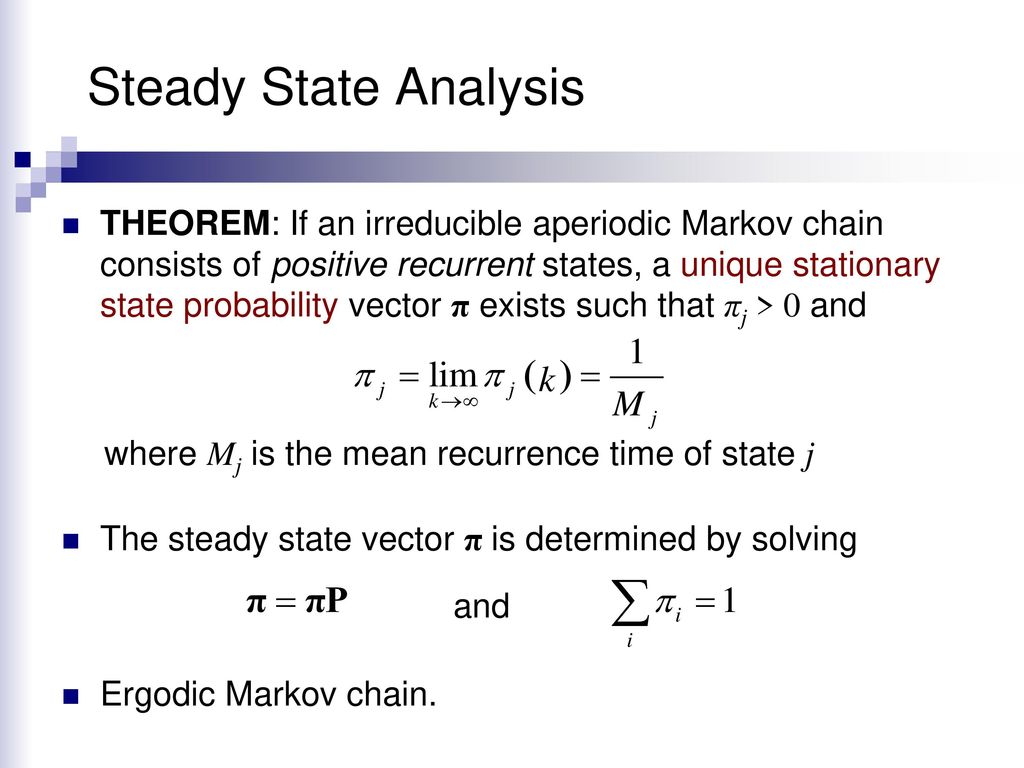

Please can someone help me to understand stationary distributions of Markov Chains? - Mathematics Stack Exchange